Cars That Think Like You – EETimes

By Sally Ward-Foxton 07.22.2022

Car makers are checking out neuromorphic technology to implement AI-powered features such as keyword spotting, driver attention, and passenger behavior monitoring.

Imitating biological brain processes is alluring because it promises to enable advanced features without adding significant power draw at a time when vehicles are trending towards battery-powered operation. Neuromorphic computing and sensing also promise benefits like extremely low latency, enabling real-time decision making in some cases. This combination of low latency and power efficiency is extremely attractive.

Here’s the lowdown on how the technology works and a hint at how it might appear in the cars of the future.

SPIKING NETWORKS

The truth is there are still some things about how the human brain works that we just don’t understand. However, cutting-edge research suggests that neurons communicate by sending each other electrical signals known as spikes, and that the sequence and timing of those spikes, rather than their magnitude, are the crucial factors. The mathematical model of how a neuron responds to these spikes is still being worked out. But many scientists agree that if multiple spikes arrive at a neuron from its neighbors at the same time (or in very quick succession), the information represented by those spikes is correlated, which causes the neuron to fire off a spike to its own neighbors.
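To make the timing idea concrete, here is a minimal sketch using the leaky integrate-and-fire model, a common textbook simplification that is assumed here for illustration and does not come from the article. The function name, weight, threshold, and time constant are all hypothetical values chosen so that a single spike is not enough to make the neuron fire.

```python
import math

def lif_fires(spike_times_ms, weight=0.6, threshold=1.0, tau_ms=5.0):
    """Return True if a leaky integrate-and-fire neuron fires for these input spikes.

    All parameter values are illustrative assumptions, not measured quantities.
    """
    potential = 0.0
    last_t = None
    for t in sorted(spike_times_ms):
        if last_t is not None:
            # The membrane potential leaks away exponentially between spikes.
            potential *= math.exp(-(t - last_t) / tau_ms)
        potential += weight  # each input spike adds a fixed contribution
        if potential >= threshold:
            return True
        last_t = t
    return False

coincident = lif_fires([10.0, 10.5])  # two spikes 0.5 ms apart
spread_out = lif_fires([10.0, 40.0])  # the same two spikes 30 ms apart
print(coincident, spread_out)
```

In this sketch the two spikes carry identical magnitude in both cases. Only their timing differs, and only the near-coincident pair pushes the neuron over its threshold.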

This is in contrast to artificial neural networks based on deep learning (mainstream AI today) where information propagates through the network at a regular pace; that is, the information coming into each neuron is represented as numerical values and is not based on timing.

Making artificial systems based on spiking isn’t easy. Aside from the fact that we don’t know exactly how the neuron works, there is also no agreement on the best way to train spiking networks. Backpropagation, the algorithm that makes training deep learning models possible today, requires the computation of derivatives, which is not possible for spikes. Some, like SynSense, approximate the derivatives of spikes in order to use backpropagation, and some use another technique called spike-timing-dependent plasticity (STDP), which is closer to how biological brains function. STDP, however, is less mature as a technology (BrainChip uses this method for one-shot learning at the edge). It is also possible to take deep learning CNNs (convolutional neural networks), trained by backpropagation in the normal way, and convert them to run in the spiking domain (another technique used by BrainChip).
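The derivative problem, and the general idea of approximating it, can be sketched in a few lines. The example below uses a "fast sigmoid" surrogate, which is one commonly used choice; it is an assumption for illustration and is not claimed to be SynSense's actual method. The function names, learning rate, and single-weight setup are hypothetical.

```python
def spike(v, threshold=1.0):
    """Forward pass: a hard step. Its true derivative is zero away from the threshold."""
    return 1.0 if v >= threshold else 0.0

def surrogate_grad(v, threshold=1.0, slope=5.0):
    """Backward pass stand-in: derivative of the fast sigmoid x / (1 + slope * |x|)."""
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2

# Toy problem: one input, one weight, and we want the neuron to spike.
x, target = 1.0, 1.0
w, learning_rate = 0.5, 1.0
steps = 0
while spike(w * x) != target and steps < 100:
    v = w * x
    error = spike(v) - target                 # d(loss)/d(spike) for a squared-error loss
    grad_w = error * surrogate_grad(v) * x    # chain rule, with the surrogate swapped in
    w -= learning_rate * grad_w
    steps += 1

print(f"weight after {steps} steps: {w:.3f}, output spike: {spike(w * x)}")
```

With the true derivative of the step function, `grad_w` would be zero and the weight would never move. The surrogate gives the optimizer a usable slope near the threshold while the forward pass still emits hard, one-bit spikes.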

SYNSENSE SPECK

SynSense is working with BMW to advance the integration of neuromorphic chips into smart cockpits and explore related fields together. BMW will be evaluating SynSense’s Speck SoC, which combines SynSense’s neuromorphic vision processor with a 128 x 128-pixel event-based camera from iniVation. It can be used to capture real-time visual information, recognize and detect objects, and perform other vision-based detection and interaction functions.

“When BMW replaces RGB cameras with Speck modules for vision sensing, they can replace not just the sensor but also a significant chunk of GPU or CPU computation required to process standard RGB vision streams,” Dylan Muir, VP global research operations at SynSense, told EE Times.

An event-based camera provides higher dynamic range than a standard camera, which is beneficial given the extreme range of lighting conditions experienced inside and outside the car.

BMW will explore neuromorphic technology for car applications, including driver attention and passenger behavior monitoring with the Speck module.

“We will explore additional applications both inside and outside the vehicle in coming months,” Muir said.

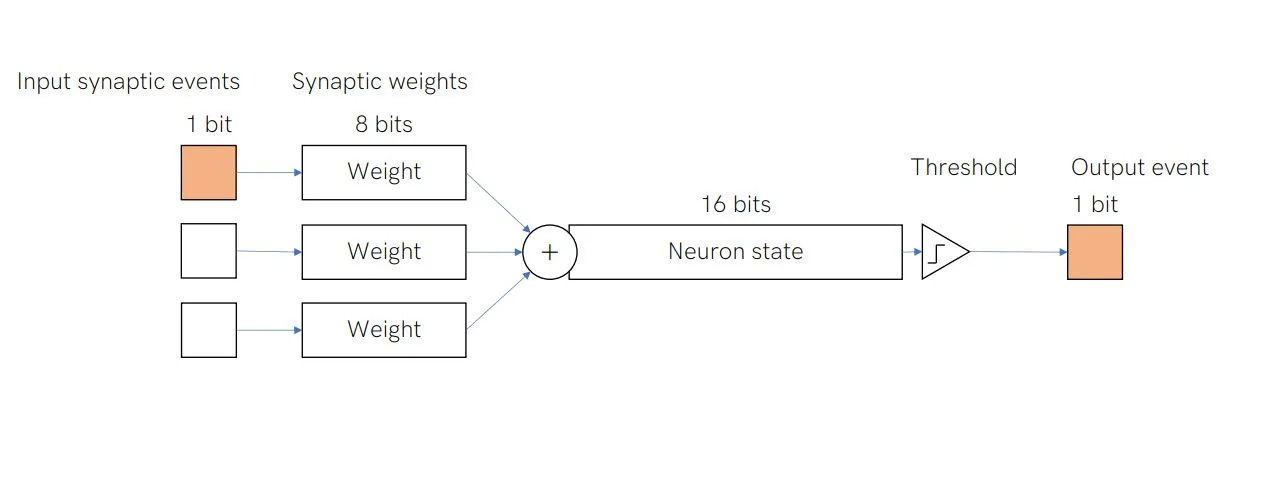

SynSense’s neuromorphic vision processor has a fully asynchronous digital architecture. Each neuron uses integer logic with 8-bit synaptic weights, a 16-bit neuron state, a 16-bit threshold, and single-bit input and output spikes. The neuron uses a simple integrate-and-fire model, combining the input spikes with the neuron’s synaptic weights until the threshold is reached, when the neuron fires a simple one-bit spike. Overall, the design is a balance between complexity and computational efficiency, Muir said.
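The neuron described above can be modeled in software roughly as follows. This is a sketch under stated assumptions, not SynSense's implementation: the bit widths come from the article, but the class name, the signed interpretation of weights and state, the saturating arithmetic, and the reset-to-zero behavior after firing are all assumptions made for illustration.

```python
INT8_MIN, INT8_MAX = -128, 127
INT16_MIN, INT16_MAX = -32768, 32767

class IntegrateAndFireNeuron:
    """Integer integrate-and-fire neuron (hypothetical software model)."""

    def __init__(self, weights, threshold):
        if not all(INT8_MIN <= w <= INT8_MAX for w in weights):
            raise ValueError("weights must fit in 8 bits")
        if not 0 < threshold <= INT16_MAX:
            raise ValueError("threshold must fit in 16 bits")
        self.weights = list(weights)
        self.threshold = threshold
        self.state = 0  # 16-bit accumulator

    def receive(self, synapse_index):
        """Handle one single-bit input spike; return 1 if the neuron fires, else 0."""
        state = self.state + self.weights[synapse_index]
        # Assumption: the accumulator saturates rather than wrapping around.
        state = max(INT16_MIN, min(INT16_MAX, state))
        if state >= self.threshold:
            self.state = 0  # assumption: reset to zero after firing
            return 1
        self.state = state
        return 0

neuron = IntegrateAndFireNeuron(weights=[40, -20, 70], threshold=100)
outputs = [neuron.receive(i) for i in [0, 0, 1, 2, 2]]
print(outputs, neuron.state)
```

Because inputs are single-bit events, each update is just one integer addition and one comparison, with no multiplication. Nothing is computed at all when no spike arrives, which fits the event-driven design the article describes.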

SynSense’s electronic neuron is based on the integrate-and-fire model. (Source: SynSense)

SynSense’s digital chip is designed for processing event-based CNNs, with each layer processed by a different core. Cores operate asynchronously and independently; the entire processing pipeline is event driven.

“Our Speck modules operate in real-time and with low latency,” Muir said. “We can manage effective inference rates of >20 Hz at <5 mW power consumption. This is much faster than what would be possible with traditional low-power compute on standard RGB vision streams.”
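As a back-of-the-envelope reading of those figures, the quoted bounds imply a ceiling on the energy spent per inference. The short calculation below simply divides power by rate; it assumes the two bounds apply at the same operating point, which the quote does not state explicitly, and the variable names are illustrative.

```python
power_w = 5e-3   # quoted upper bound on power: <5 mW
rate_hz = 20.0   # quoted lower bound on effective inference rate: >20 Hz

# Energy per inference = power / rate. Using the upper bound on power and the
# lower bound on rate gives an upper bound on energy per inference.
energy_per_inference_j = power_w / rate_hz
interval_s = 1.0 / rate_hz  # longest interval between successive results

print(f"under {energy_per_inference_j * 1e3:.2f} mJ per inference")
print(f"a result at least every {interval_s * 1e3:.0f} ms")
```

Under that assumption, the figures work out to less than a quarter of a millijoule per inference, with a new result at least every 50 ms.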

While SynSense and BMW will be exploring neuromorphic car use cases in the “smart cockpit” initially, there is potential for other automotive applications, too.

“To begin with we will explore non-safety-critical use cases,” Muir said. “We are planning future versions of Speck with higher resolution, as well as revisions of our DynapCNN vision processor that will interface with high-resolution sensors. We plan that these future technologies will support advanced automotive applications such as autonomous driving, emergency braking, etc.”