SynSense and Prophesee are partnering to develop a single-chip, event-based image sensor integrating Prophesee’s Metavision image sensor with SynSense’s DYNAP-CNN neuromorphic processor. The companies will collaborate on design, development, manufacture and commercialization of the combined sensor-processor, aiming to produce ultra-low power sensors that are both small and inexpensive.

“We are not a sensor company, we are a processor company,” Dylan Muir, SynSense’s senior director of global business development, algorithms and applications, told EE Times. “Because we’re looking at low-power sensor processing, the closer we can put our hardware to the sensor, the better. So partnering with event-based vision sensor companies makes a lot of sense.”

SynSense previously combined its spiking neural network processor IP with a dynamic vision sensor from Inivation. (Source: SynSense)

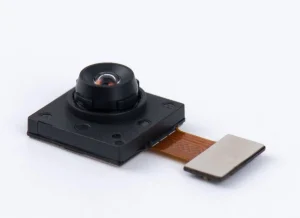

SynSense also works with event-based image sensor company Inivation, with whom it has developed a 128-by-128 resolution event-based camera module called Speck.

“We plan to move in the direction of higher resolution pixel arrays with Prophesee,” Muir said. Noting Prophesee’s earlier collaboration with Sony, Muir also cited Prophesee’s expertise in achieving low-light sensitivity as an advantage. “In the long term, we’re looking at being able to do high-resolution vision processing in the device, in a very compact module, and this is more complex than just scaling everything up,” Muir said.

SynSense/Inivation Speck camera module. (Source: SynSense)

A higher resolution sensor array occupies more space and requires more processing, so processor cores must be bigger. Muir said silicon requirements for a high-quality image sensor do not fit with requirements for compact digital logic. Hence, a stacked architecture or a multi-chip solution bonded back-to-back seems the most likely approach.

Algorithmic work will also be required for a higher-resolution sensor; currently smaller pixel arrays are processed by a single convolutional neural network (CNN). Higher resolution would mean a huge CNN. Alternatively, an image could be broken into tiles and run on multiple CNNs in parallel, or just one region of the image could be examined. Work is ongoing, Muir said.
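The tiling option mentioned above can be illustrated with a minimal sketch. It assumes events arrive as `(x, y, polarity, timestamp)` tuples and that each tile would feed its own small CNN; the function name `split_into_tiles` and the 64-pixel tile size are hypothetical choices for illustration, not anything SynSense has described.

```python
from collections import defaultdict


def split_into_tiles(events, tile_size=64):
    """Group (x, y, polarity, timestamp) events by the tile they fall in.

    Returns a dict mapping (tile_col, tile_row) to a list of events whose
    coordinates are shifted to be relative to that tile's origin.
    The event layout and tile size are assumptions made for this sketch.
    """
    tiles = defaultdict(list)
    for x, y, polarity, t in events:
        key = (x // tile_size, y // tile_size)
        local = (x % tile_size, y % tile_size, polarity, t)
        tiles[key].append(local)
    return dict(tiles)


# Four events scattered over a larger pixel array.
events = [(3, 5, 1, 10), (70, 5, 0, 12), (130, 200, 1, 15), (4, 60, 1, 20)]
tiles = split_into_tiles(events, tile_size=64)
```

Each entry in `tiles` could then be handed to a separate network instance, while tiles that receive no events cost nothing to process.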

Event-based vision

Event-based vision sensors like Prophesee’s focus not on images but on changes between video frames. The technique is modeled on how the human eye records and interprets visual inputs. It drastically reduces the amount of data produced and is more effective in low-light scenarios. It can also be implemented with much less power than other image sensors.

Prophesee’s event-based Metavision sensors feature embedded intelligence in each pixel, enabling each to activate independently, thereby triggering an event.
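As a rough illustration of per-pixel event generation, the sketch below models a single pixel that fires only when its brightness moves far enough from the level at its last event. The function name `pixel_events`, the threshold value and the linear comparison are assumptions made for this example; it is not a description of the Metavision pixel circuit.

```python
def pixel_events(samples, threshold=10):
    """Emit (sample_index, polarity) events for one pixel's brightness samples.

    polarity is +1 for a brightness increase and -1 for a decrease.
    The reference level is updated only when an event fires, so small
    fluctuations around a steady value produce no output at all.
    The threshold and linear comparison are assumptions for this sketch.
    """
    events = []
    reference = samples[0]
    for i, value in enumerate(samples[1:], start=1):
        change = value - reference
        if abs(change) >= threshold:
            events.append((i, 1 if change > 0 else -1))
            reference = value
    return events


static = pixel_events([100, 101, 99, 100, 102])   # nearly constant brightness
moving = pixel_events([100, 104, 112, 111, 95])   # brightness rises, then falls
```

The nearly constant pixel yields an empty list, which is where the data reduction comes from: pixels that see no change send nothing.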

SynSense’s mixed-signal processor for low-dimensionality signal processing (audio, bio signals, vibration monitoring) consumes less than 500 µW. SynSense had no immediate plans to commercialize its technology, and on-chip resources were not sufficient for running a CNN, a requirement for vision processing. It developed a second, digital architecture tailored for convolutional networks. That IP will be integrated with the Prophesee sensor.

Moving to a fully asynchronous digital architecture also meant the design could transition to more advanced process technology while consuming less power.

The processor IP consists of a spiking convolutional core tailored to event-based versions of CNNs. SynSense uses training based on back-propagation for spiking neural networks. According to Muir, that approach boosts the processing of temporal signals beyond standard CNNs converted to run in the event domain. Back-propagation is achieved by approximating the derivative of a spike during training. By contrast, inference is purely spike-based.
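The idea of approximating a spike’s derivative during training can be sketched in a few lines. The forward pass below uses a hard step, while the backward pass substitutes a smooth curve shaped like the derivative of a fast sigmoid. That particular surrogate, the `slope` parameter and the function names are assumptions chosen for illustration; the article does not say which approximation SynSense uses.

```python
def spike(v, threshold=1.0):
    """Forward pass: a hard step, 1.0 if the state reaches the threshold."""
    return 1.0 if v >= threshold else 0.0


def surrogate_grad(v, threshold=1.0, slope=5.0):
    """Backward pass: a smooth stand-in for the step's derivative.

    Shaped like the derivative of a fast sigmoid: it peaks at the
    threshold and decays with distance from it. This choice of
    surrogate is an assumption for this sketch.
    """
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2


# One gradient step on a single weight w with input x, aiming for a spike.
x, w, target, lr = 1.0, 0.5, 1.0, 0.5
v = w * x
out = spike(v)
# Chain rule for loss = 0.5 * (out - target)**2, with the surrogate
# used in place of the true (zero almost everywhere) d(out)/d(v).
grad_w = (out - target) * surrogate_grad(v) * x
w_new = w - lr * grad_w
```

With the true derivative the gradient would be zero and the weight would never move; with the surrogate, the weight is nudged toward the threshold even though the neuron did not fire.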

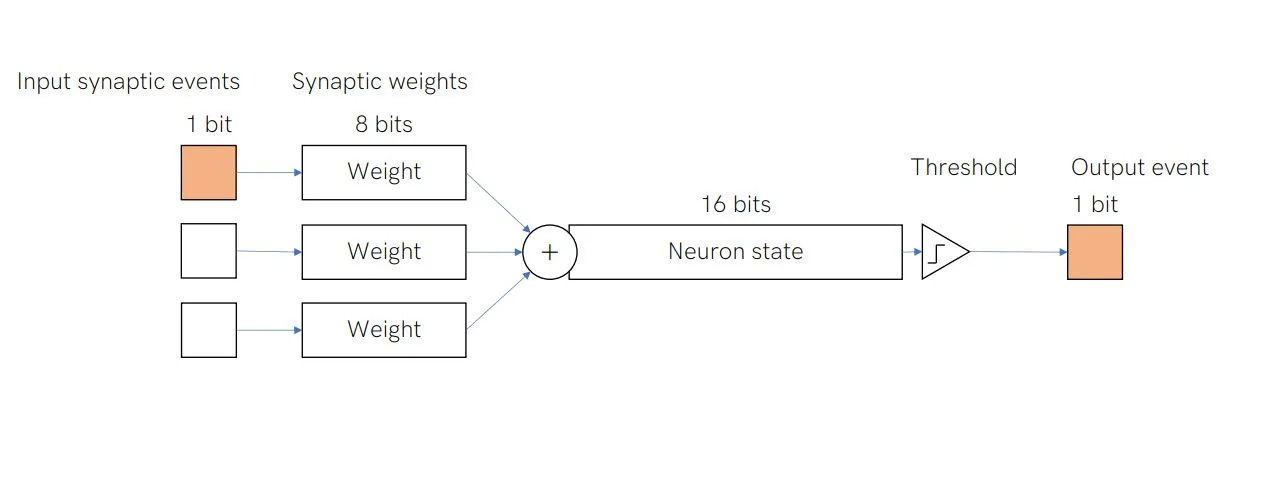

SynSense’s spiking neuron uses integer logic with 8-bit synaptic weights, a 16-bit neuron state, 16-bit threshold and single-bit input and output spikes. The neuron is simply “integrate and fire,” the simplest neuron model. Unlike more complex models such as “leaky integrate and fire,” its internal state does not decay when there is no input, which reduces computational requirements. The SynSense neuron adds an 8-bit number to a 16-bit number, then compares it to the 16-bit threshold.
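The add-then-compare operation described above can be sketched as follows. The bit widths come from the description; the signed ranges, saturating arithmetic, `>=` comparison and reset-to-zero after firing are assumptions made for this example, and the class name is hypothetical.

```python
class IntegrateAndFireNeuron:
    """Integer integrate-and-fire neuron with no leak (illustrative sketch)."""

    STATE_MIN, STATE_MAX = -(2 ** 15), 2 ** 15 - 1   # assumed signed 16-bit state
    WEIGHT_MIN, WEIGHT_MAX = -(2 ** 7), 2 ** 7 - 1   # assumed signed 8-bit weight

    def __init__(self, threshold):
        if not self.STATE_MIN <= threshold <= self.STATE_MAX:
            raise ValueError("threshold must fit in 16 bits")
        self.threshold = threshold
        self.state = 0

    def receive(self, weight):
        """Process one input spike arriving on a synapse with this weight.

        Adds the 8-bit weight to the 16-bit state, compares the result
        with the 16-bit threshold, and returns 1 if the neuron fires.
        """
        if not self.WEIGHT_MIN <= weight <= self.WEIGHT_MAX:
            raise ValueError("weight must fit in 8 bits")
        total = self.state + weight
        # Saturate rather than wrap around (an assumption for this sketch).
        self.state = max(self.STATE_MIN, min(self.STATE_MAX, total))
        if self.state >= self.threshold:
            self.state = 0  # assumed reset-to-zero after a spike
            return 1
        return 0


neuron = IntegrateAndFireNeuron(threshold=100)
outputs = [neuron.receive(w) for w in (40, 40, -10, 40, 40)]
```

Because there is no leak term, the state changes only when an input spike arrives, so the neuron does no work between events.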

“It was somewhat surprising to us at the beginning that we could cut down the neuron design to this degree of simplicity and have it perform really well,” Muir said.

SynSense’s digital binary asynchronous neuron uses a simple “integrate and fire” design. (Source: SynSense)

SynSense’s digital chip is tailored to CNN processing, and each CNN layer is processed by a different processor core. Cores operate asynchronously and independently; the entire processing pipeline is event driven. In a demonstration of a system that monitors intent to interact (whether or not the user is looking at the device), the SynSense stack processed inputs with latency below 100 ms and with less than 5 mW dynamic power consumed by sensor and processor.

SynSense has taped out several iterations of its processor core, with the Speck sensor ready for commercialization in real-time vision sensing applications such as smartphones and smart home devices. The camera’s 128-by-128 resolution is sufficient for short-range, in-home applications (outdoor applications such as surveillance would require higher resolution).

SynSense was founded in 2017, spun out from the University of Zurich. The company has about 65 employees spread across an R&D office in Zurich, a systems and product engineering base in Chengdu, China, and an IC design team in Shanghai. It recently closed a Pre-B funding round that included investments from Westport Capital, Zhangjiang Group, CET Hik, CMSK, Techtronics, Ventech China, CTC Capital and Yachang Investments (SynSense declined to provide the funding amount).

A hardware development kit for the event-based vision processor is available now for gesture recognition, presence detection and intent-to-interact applications in smart home devices. Samples of the vision processor itself, a developer kit for audio and IMU processing, samples of the Speck camera module and the Speck module development kit will be available by the end of 2022.