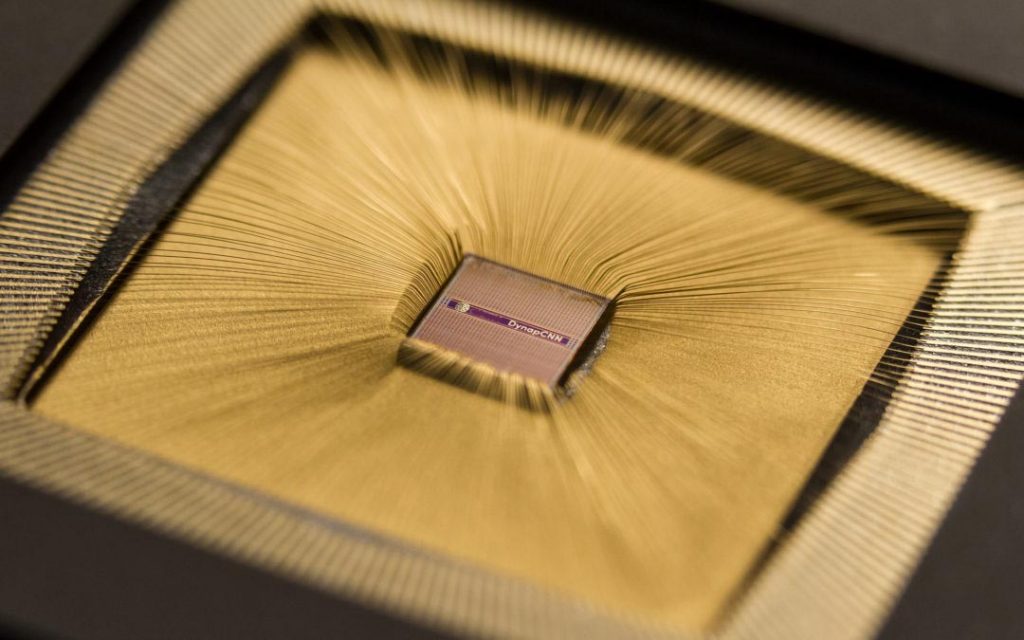

DYNAP-CNN: the World’s First 1M-Neuron, Event-Driven Neuromorphic AI Processor for Vision

Today we are announcing our new fully asynchronous, event-driven neuromorphic AI processor for ultra-low-power, always-on, real-time applications.

DYNAP-CNN opens brand-new possibilities for dynamic vision processing, bringing event-based vision applications to power-constrained devices for the first time.

DYNAP-CNN is a 12 mm² chip fabricated in 22 nm process technology. It houses over 1 million spiking neurons and 4 million programmable parameters, and its scalable architecture is optimally suited for implementing Convolutional Neural Networks. It is a first-of-its-kind ASIC that brings the power of machine learning and the efficiency of event-driven neuromorphic computation together in one device. DYNAP-CNN is the most direct and power-efficient way of processing data generated by event-based and dynamic vision sensors.

As a next-generation vision processing solution, DYNAP-CNN is 100–1000 times more power-efficient than the state of the art and delivers latencies 10 times shorter in real-time vision processing.

These energy savings mean that applications based on DYNAP-CNN can stay always-on and process data locally on battery-powered, portable devices.

Computation in DYNAP-CNN is triggered directly by changes in the visual scene, without a high-speed clock. Moving objects give rise to sequences of events, which the chip processes as soon as they arrive. Because there is no notion of frames, DYNAP-CNN’s continuous computation achieves ultra-low latency below 5 ms. This represents at least a 10x improvement over the deep learning solutions currently available on the market for real-time vision processing.
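To illustrate what frame-free, event-triggered computation means in general terms, here is a minimal sketch of a generic event-driven convolution with integrate-and-fire neurons. It is not DYNAP-CNN's actual circuit, architecture, or software API. The names `Event`, `EventDrivenConvLayer`, and `process`, the single-channel layout, the threshold value, and the reset-by-subtraction rule are all hypothetical choices made for illustration. Each incoming sensor event updates only the output neurons whose receptive field covers it, and any neuron crossing its threshold emits an output event immediately. No frame is ever assembled and no clock drives the updates.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """A single sensor event (hypothetical format, assumed for illustration)."""
    x: int
    y: int
    polarity: int  # +1 for a brightness increase, -1 for a decrease


class EventDrivenConvLayer:
    """Hypothetical single-channel spiking convolution layer.

    Neurons are only touched when an input event arrives, so the amount of
    computation scales with scene activity rather than with a frame rate.
    """

    def __init__(self, width, height, kernel, threshold=1.0):
        self.width = width
        self.height = height
        self.kernel = kernel          # square list of lists with odd size
        self.k = len(kernel) // 2     # kernel radius
        self.threshold = threshold
        # Membrane potential of each output neuron (same size as the input).
        self.v = [[0.0] * width for _ in range(height)]

    def process(self, event):
        """Integrate one input event into every output neuron whose receptive
        field covers it, and return the output spikes it triggers."""
        spikes = []
        for dy in range(-self.k, self.k + 1):
            for dx in range(-self.k, self.k + 1):
                # Output neuron whose receptive field sees this input pixel.
                ox, oy = event.x - dx, event.y - dy
                if 0 <= ox < self.width and 0 <= oy < self.height:
                    w = self.kernel[dy + self.k][dx + self.k]
                    self.v[oy][ox] += w * event.polarity
                    if self.v[oy][ox] >= self.threshold:
                        self.v[oy][ox] -= self.threshold  # reset by subtraction
                        spikes.append(Event(ox, oy, 1))
        return spikes


if __name__ == "__main__":
    layer = EventDrivenConvLayer(5, 5, [[0.5] * 3 for _ in range(3)])
    print(len(layer.process(Event(2, 2, 1))))  # first event: no neuron fires
    print(len(layer.process(Event(2, 2, 1))))  # second event: 3x3 block fires
```

In this toy run, the first event only charges the nine neurons around pixel (2, 2). The second event at the same pixel pushes them over threshold, so they spike at once. Output events are produced as soon as their input arrives, which is the property that lets an event-driven pipeline avoid waiting for a full frame.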

DYNAP-CNN Development Kits will be available in Q3 2019.